BRIEF

The potential of the surface lies in image visualization, or imaging, that allows humans to understand and interact with the world. The surface then becomes a holder of information open to human perception. Technology provides “machine” vision based on metrics and trained models that can perceive and relay information in their own unique way.

We are investigating AI’s capability to generate and reimagine the potentials of what digitally "readable" images of spaces and surfaces could mean for the physical world, not just to image information that humans perceive, but to store information that is readable through machine vision. A digital aesthetic catered to the machine can be merged with the human aesthetic through collaborative design between humans and generative AI, creating surfaces that can be legible to both human and machine vision. We aim to harness the synthetic imagination of generative AI to envision new possibilities of the future, where data-encoded patterns are merged with physical surfaces.

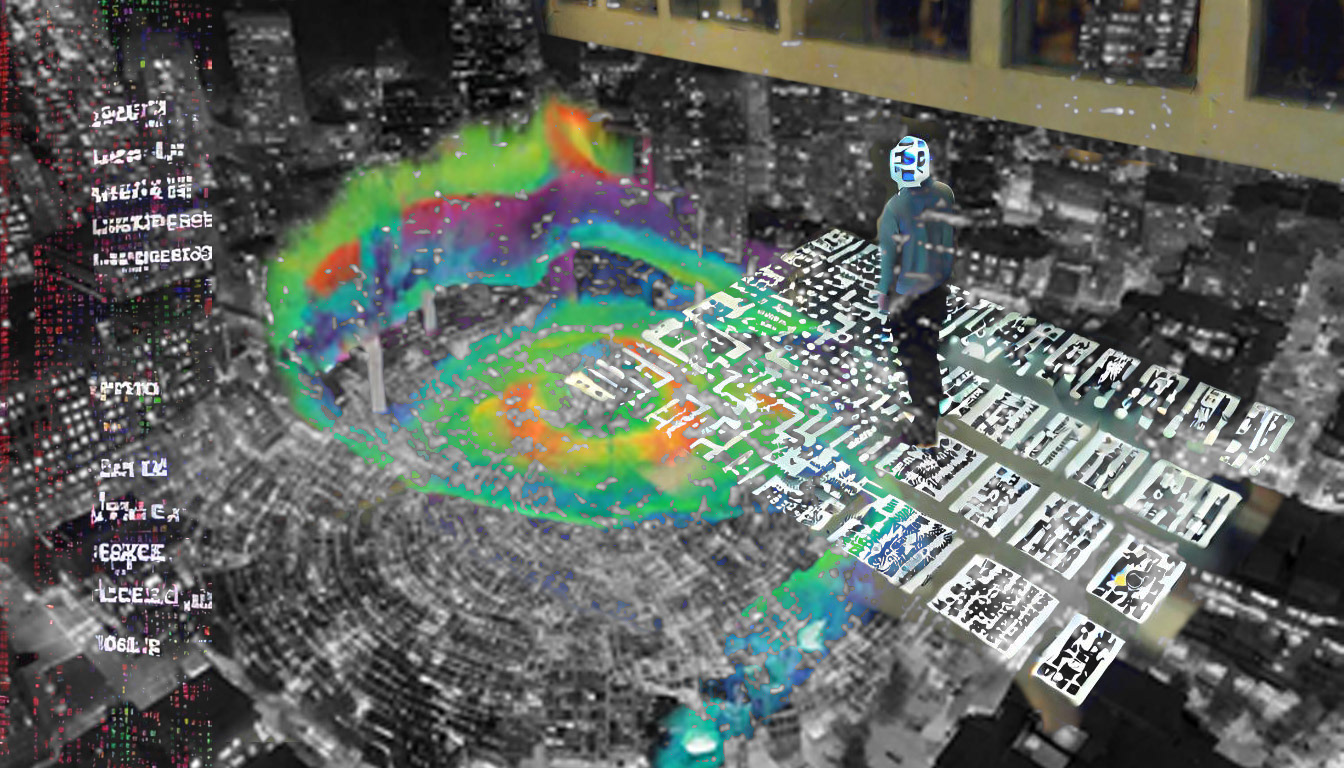

While investigating the notion of data-encoded surfaces, our research also led us to speculate on building surfaces composed of technologies that allow them to scan, interact with, and respond to their environment.

Denise Scott Brown and Robert Venturi’s Learning from Las Vegas acted as a conceptual anchor for the way we thought about the exterior building surface. We envision a speculative future where the surface serves as a dynamic reserve of information. The surface becomes the threshold between virtual and physical landscapes, offering an immersive visualization of information that is accessible through a unified interface (the surface) and changing how individuals navigate and interact within urban settings.

Data-encoded and embedded surfaces could fundamentally change the way humans and machines interact with the urban environment. Transparent access to information blurs the boundary between private and public space, creating a bridged in-between space. For architects, it challenges notions of how we understand building programs, circulation, and private versus public space, and provides a new perspective on how urban environments can be designed in the future.

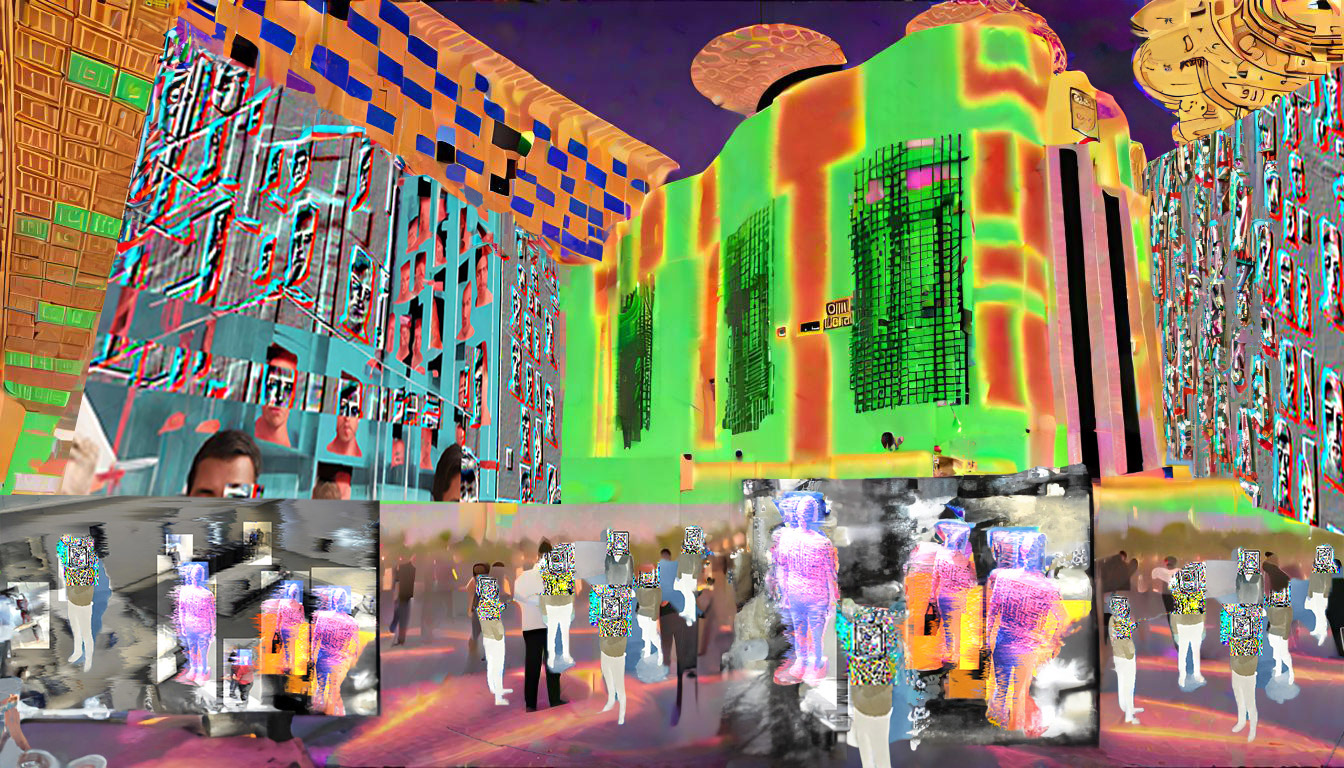

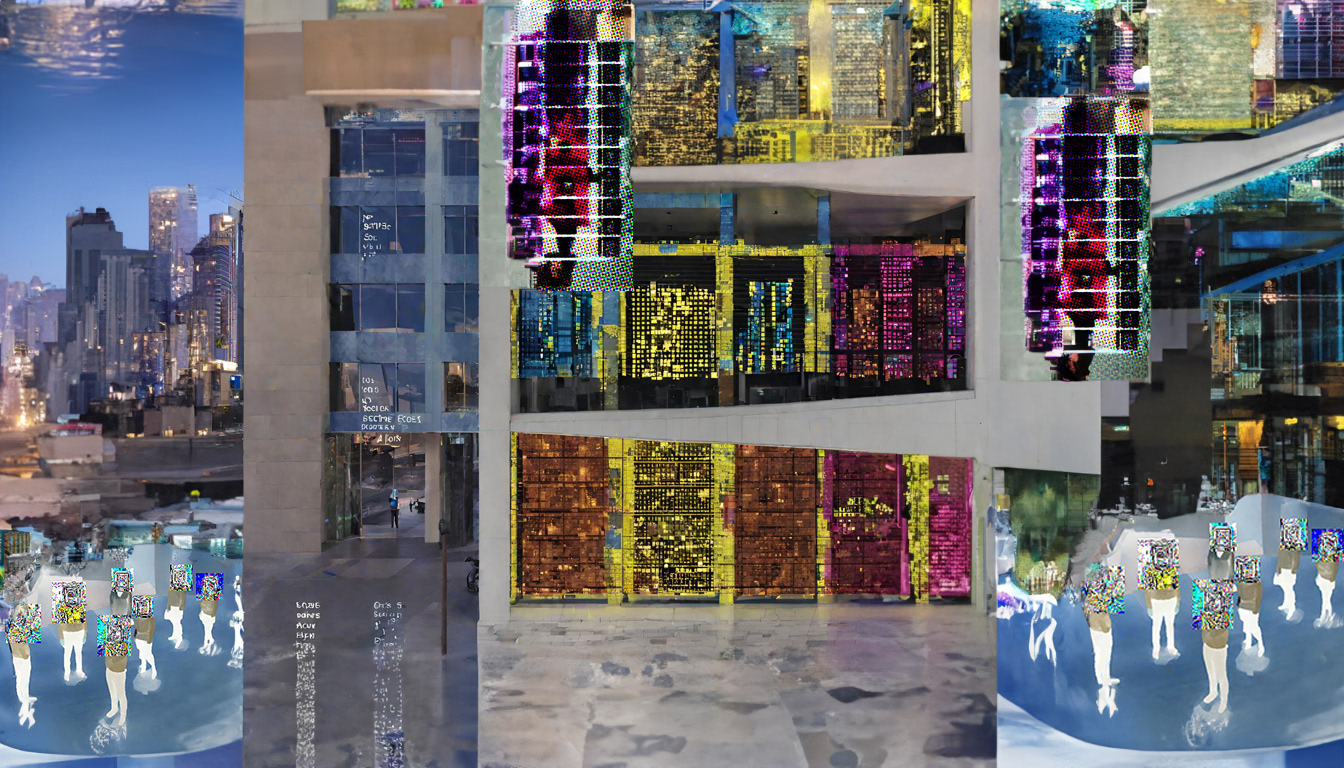

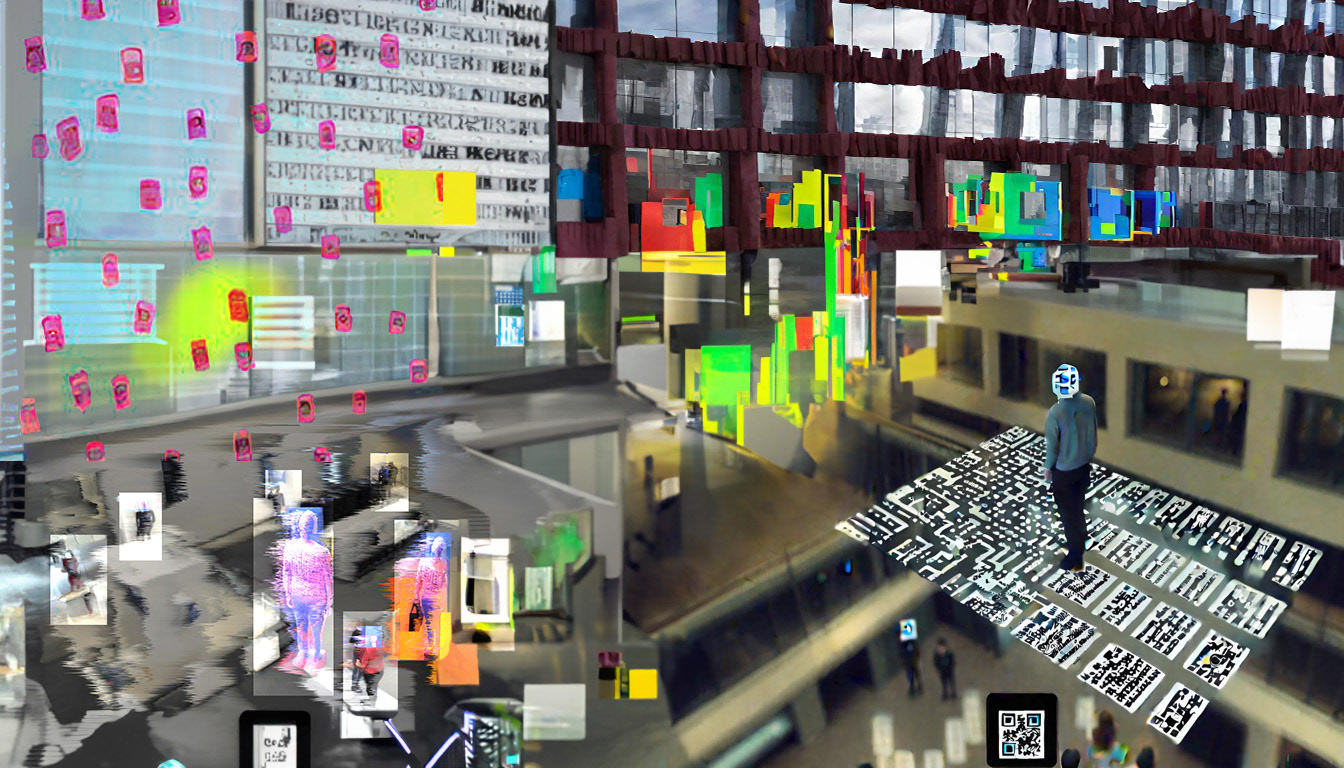

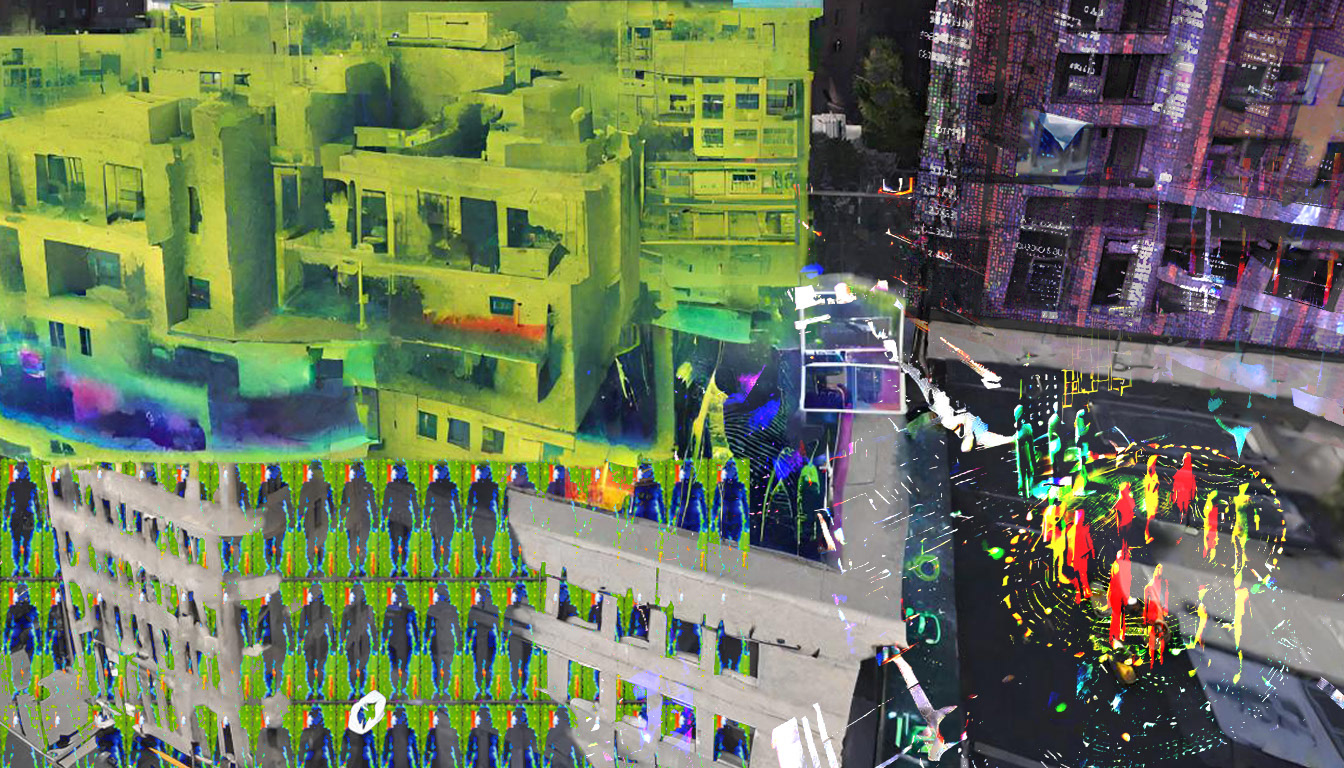

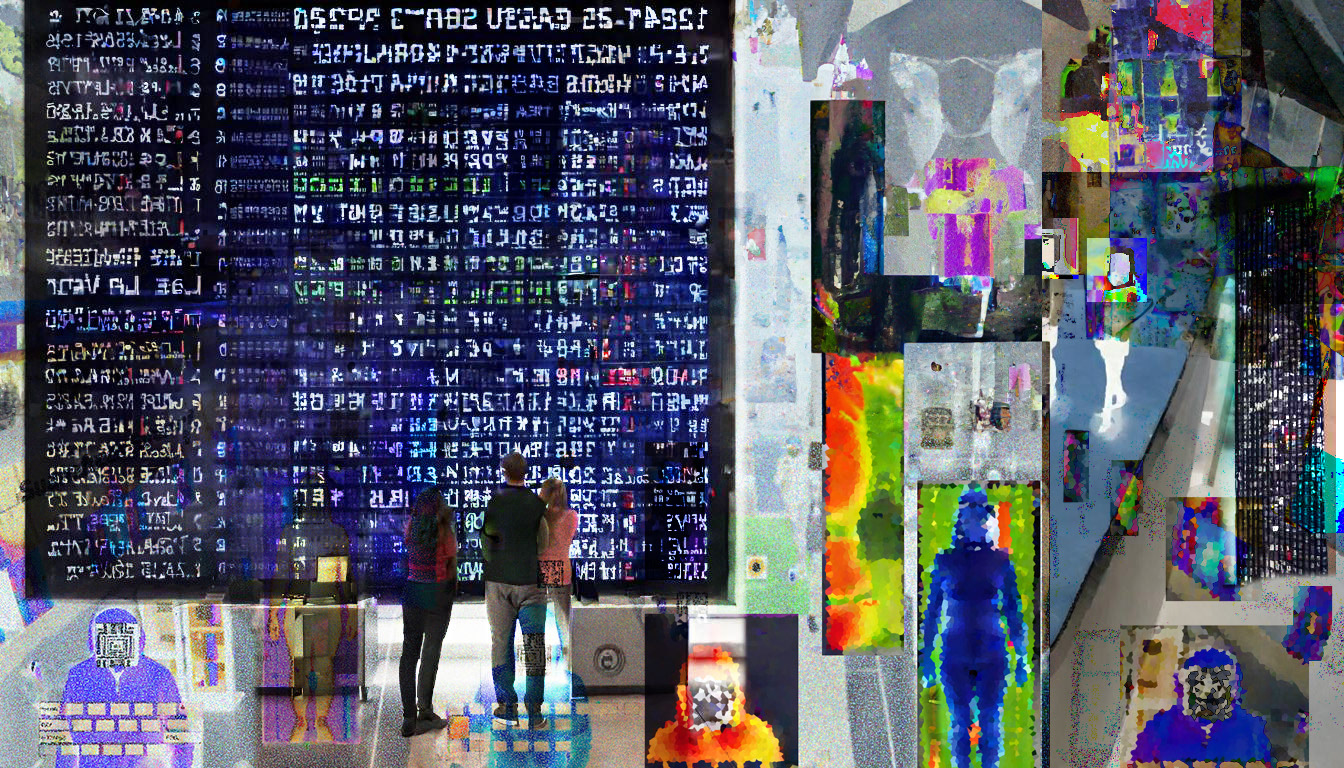

This series of images speculates on the interactions between humans and machines in a newly imagined and combined aesthetic of a Bridged Reality. This merged environment imagines how these technologies might interact with one another, showing how the surfaces of the city may evolve. This amalgamation, where information systems are in constant flux, distorts and abstracts the boundaries between data, the environment, and people.

HUMAN VISION

In the first video, we imagine that humans are able to scan a data-embedded environment. Imagine a future where data is seamlessly embedded within the fabric of our cities, creating environments that blur the lines between the physical and digital realms and between public and private spaces. Join us as we speculate on how buildings can become transformative, morphing entities, reacting to real-time changes in data and information about the surrounding environment. Our increasingly augmented capabilities can allow us to decipher and interact with data within these embedded surfaces. We are exploring how components of existing scannable codes can be extracted to generate new codes and new layers of meaning. With an ever-evolving future of technology ahead of us, there is room for a new aesthetic to be established for the surfaces around us.

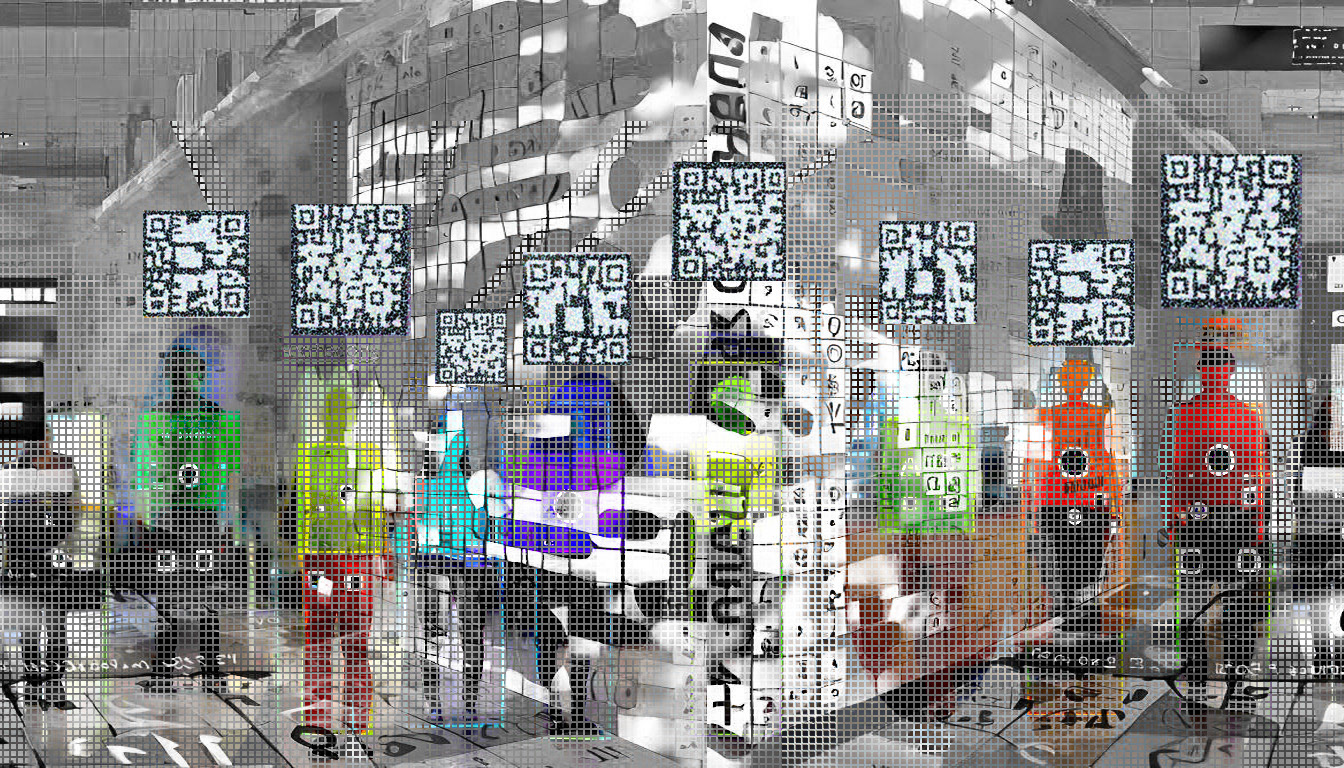

MACHINE VISION

In “Machine Vision,” where buildings read the environment, we speculate on buildings’ technological potential to become active participants in their surroundings. With the collaboration of humans and the synthetic imagination of generative AI, we begin to imagine an aesthetic and explore the various implications and effects of its vision on our social interactions and environment. Machine vision differs in the way it interprets the environment, and as humans see through the machine perspective, they may discover new datasets that the surface can present. Our investigation imagines and speculates on this aesthetic to open up new possibilities for designers to see what the surface can be.

To generate these images, we began by identifying technologies with distinct aesthetics, starting with scanning and mapping. Once a few of these technologies were identified, we compiled images of them and used them to train a model in Runway. The style generator trains on the visual aesthetic of a set of images, prioritizing the "look" and feel of a visual creation. By training an AI model on about 30 curated images, we let the aesthetics of the technologies we have now serve as a dataset from which AI can speculate on a new aesthetic.

CONCLUSION

The project is a speculative imagination of what the fabric of cities may look like, where the elements of cities constitute a new bridged materiality that visualizes the aesthetics of scannable surfaces. Surface conditions are interactive elements responding to stimuli in the environment. Buildings are experienced as dynamic and transformative - changing based on the data that informs them. This synthetic vision redefines the urban fabric: it envisions cities where data-encoded patterns are not only embedded in surfaces but also shape the way humans perceive, interact with, and inhabit the built environment.

As we envision a future where these surfaces can hold vast amounts of information, we recognize the far-reaching implications for architectural design and urban planning in terms of privacy, information transparency and access, and private vs public spaces. Through our collaborative efforts with generative AI, a synthetic aesthetic emerges that encompasses both human and machine vision, blurring the physical and digital landscapes - creating a bridged reality. The existence of scannable surfaces and their role in shaping and informing interaction in our cities will fundamentally change the way we design, inhabit, and understand the environment.

IMPLICATIONS OF TECHNOLOGIES

These technologies cover a broad range of methods to embed data into surfaces and the visualizations of those surfaces. Barcodes, QR codes, data matrices and geospatial grids inspire an aesthetic that is rooted in the way a machine can perceive and read information - anchor points, patterns, and certain digital masks. These become apparent in the first set of visualizations, where the code and the site become one and the same. These are direct projections of a language perceived by machines into our environment. The data of the environment drives the aesthetic of the city - resulting in surfaces that convey certain information to humans and certain information to machines. Human and machine interaction with the environment is then a product of the information that is embedded and reflected in the building surface. Information about what people are experiencing within the building - how many rooms are available, what the temperature is, how much money people are winning or losing - is the kind of information that could potentially be available to people as they interact with the world.

Machines can exhibit biases similar to those of humans, and these biases can lead to different outcomes for different groups. The use of surveillance and categorization tools based on historical and current behaviors raises questions about privacy, expression, and access. These factors may influence the way individuals and communities interact with the built environment, either perpetuating or dismantling societal norms and structures.

1) QR CODES

We saw the QR code as a figure-ground condition on the elevation of surfaces. Just as conventional figure-ground images (like the Nolli map) convey certain information, the elevation of these surfaces will hold data in its patterns to convey information. AI assists us in rapidly establishing different combinations of figure-ground patterns that can be reimagined and systemized to convey real-time data. The visualizations speculate on the aesthetic and the implications of extracting the principles by which these codes are read - contrast, saturation, and pattern - so that they become the driving design principles in an urban context. When applied to the surface of the built environment, elements of the figure-ground can be embedded with data that is readable to the machine.
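As a rough illustration of data held in a figure-ground pattern, the sketch below lays the bits of a short message out as a grid of dark and light cells. It is not a QR encoder: a real QR code adds finder patterns, masking, and error correction. The function name `to_figure_ground` and the chosen grid width are assumptions made only for this example.

```python
def to_figure_ground(data: bytes, width: int) -> list[str]:
    """Lay out the bits of `data` as rows of '#' (figure) and '.' (ground)."""
    # Each byte becomes eight bits, most significant bit first.
    bits = "".join(f"{byte:08b}" for byte in data)
    # Pad with ground cells so the final row is complete.
    padded_len = -(-len(bits) // width) * width
    bits = bits.ljust(padded_len, "0")
    return [
        "".join("#" if b == "1" else "." for b in bits[i:i + width])
        for i in range(0, len(bits), width)
    ]


if __name__ == "__main__":
    for row in to_figure_ground(b"SURFACE", 8):
        print(row)
```

Each row of the output reads as a strip of a facade elevation, with the message recoverable by reading dark cells as 1 and light cells as 0.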

2) JAB CODES

The incorporation of color in the JAB code enables it to encode more information within the same area compared to traditional black-and-white codes like a QR code. JAB codes are a color two-dimensional matrix code, a version of a scannable code that is more accessible and easier to scan with our conventional devices. Using JAB codes on facades could allow for more design flexibility because of a higher margin of error. Color and pixels play a big part in its design.
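The capacity gain from color can be estimated with simple arithmetic: a cell that can take one of n distinguishable colors carries log2(n) bits, so an 8-color cell holds three times as much as a black-and-white one. The sketch below computes this raw figure only. It ignores finder patterns, metadata, and error correction, and the 21 x 21 grid and the helper name `raw_bits` are illustrative assumptions.

```python
import math


def raw_bits(modules_per_side: int, colors: int) -> int:
    """Raw bit capacity of a square grid whose cells each take one of `colors` values."""
    # Whole bits per cell; 2 colors give 1 bit, 8 colors give 3 bits.
    bits_per_module = int(math.log2(colors))
    return modules_per_side * modules_per_side * bits_per_module


if __name__ == "__main__":
    print(raw_bits(21, 2))  # black-and-white grid
    print(raw_bits(21, 8))  # eight-color grid of the same size
```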

3) GPS

Geolocation allows us to track devices and their coordinates on a 2D plane. With the growing vertical landscape, there is much more surface to the fabric of the city than merely what can be seen from above. By mapping geolocation grids onto the vertical planes of building facades, people and devices can be pinpointed on a vertical axis as well. These visualizations allow the vertical surfaces of our built environment to encode location-based information that becomes accessible to human and machine users when scanned.
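One way to picture a geolocation grid on a vertical plane is to treat the facade as a 2D coordinate system of its own, with distance along the facade as one axis and height above ground as the other. The sketch below is a minimal illustration under that assumption; the function name `facade_cell` and the 3-meter cell size are hypothetical and not part of the project.

```python
import math


def facade_cell(along_m: float, height_m: float, cell_m: float = 3.0) -> tuple[int, int]:
    """Return the (column, row) of the facade grid cell containing a point.

    along_m  -- horizontal distance from the facade's left edge, in meters
    height_m -- height above ground, in meters
    cell_m   -- side length of one square grid cell, in meters (assumed)
    """
    return (math.floor(along_m / cell_m), math.floor(height_m / cell_m))


if __name__ == "__main__":
    # A device 7.5 m along the facade and 12.2 m above the ground.
    print(facade_cell(7.5, 12.2))
```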

4) LIDAR

Lidar scans and point clouds offer high accuracy that allows for more versatile design decisions and an ability to cover large areas. Because of this, lidar is easily integrated with other geospatial datasets, and can be combined with other code systems such as GPS and satellite locations. Lidar and geolocation can work together, so that the locations of people, program, entrances, and exits all become more accessible and available to the user.

5) SCANNING

Scanning typically provides a detailed three-dimensional visualization of the environment. It displays millions of tiny points, each representing a laser return plotted according to its position in 3D space. This creates highly accurate mappings of our environment, including shape, elevation, density and surface textures. This data can be applied to topographic mapping, urban planning, autonomous vehicles, and more, which all help us navigate and learn more about our environments.

6) OBJECT DETECTION

Object detection provides us with a different view of how machines recognize objects. A human sees an object in terms of depth, cultural meaning, and individual memory. A machine sees an object in terms of color, contrast, and values. The meshing of these two ways of seeing creates a new understanding of objects and their place in our environment.

One of the most recognizable visual features of object detection systems is the use of bounding boxes. These are typically rectangular overlays that enclose the detected objects within an image or video frame.
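To make the bounding-box overlay concrete, the sketch below finds the smallest axis-aligned rectangle that encloses the marked pixels of a small binary mask. It assumes the detection step has already happened and only shows how the box itself is derived; the function name `bounding_box` and the toy mask are illustrative.

```python
def bounding_box(mask: list[list[int]]):
    """Return (x_min, y_min, x_max, y_max) enclosing all nonzero cells, or None."""
    # Row indices that contain at least one marked pixel.
    rows = [y for y, row in enumerate(mask) if any(row)]
    # Column indices of every marked pixel.
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None  # nothing detected
    return (min(cols), min(rows), max(cols), max(rows))


if __name__ == "__main__":
    mask = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 0, 1, 0],
        [0, 0, 0, 0],
    ]
    print(bounding_box(mask))
```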

7) FACIAL RECOGNITION

Facial recognition uses a combination of computer technology and visual design to identify or verify people from photos or videos. A visual overlay is placed on a detected face, including lines, points, or boxes highlighting key facial features like eyes, nose, mouth, and the outline of the face. By using facial recognition software, buildings can recognize humans and categorize them according to their needs.

The building can offer or decline admission, open up facilities, and improve accessibility, catering to a specific individual or to the masses at large. The images generated from the trained model were turned into videos through image-to-video generation, along with a text prompt.

8) X RAYS

The last technology is X-ray imaging. X-ray images are typically in shades of black, white, and gray. This simplifies the image and allows for detailed examination of the structures within an object or environment, not necessarily as objects we identify, but as different densities or materials. Details and objects are “seen” by the machine through their ability to absorb X-rays, depending on their texture or density, creating a high-contrast, skeletal aesthetic that can not only extract certain figures over others, but also layer objects in a transparent way that creates depth that humans can’t perceive.

Please visit our archival website for more information.

GROUP MEMBERS:

Arpitha Cunigal

Caroline Ditzler

Kaylen Choi

Lauren Li

Rebecca R. Ramirez

Ruqaiyah Bandukwala
